Data scientist takes guitar lessons

I decided to take guitar lessons when I was 39. I had played guitar since age 13 but had never taken lessons. I found a teacher, a jazz guitarist, at a local music store. These lessons gave me the opportunity to learn the fundamentals of music from a true master, and they exposed every musical shortcoming I had all at once. I had rarely read music before, so I needed to practice a lot. He said the most important thing when learning a song is to learn the melody, and that it is especially good to learn the words and to practice along with the original recording.

Eastman hollow-body jazz guitar I am learning to play

An experienced musician can choose chords that harmonize with the melody, not always the ones written. From those chords, they can improvise a melody that leads its way through the changes. To do what my teacher showed me, I needed to develop my playing ability, my perceptual skills, and my creative skills. To truly master musical expression in that way, I need to be able to perceive the key of a piece, its melody, its chords, and the functions of those chords. Currently, I can identify the key and play in it on the instrument, but my ability to recognize chords is limited. How could I grow my ability to identify which chords would harmonize with a melody? I need to practice.

Cover art for The Composer of Desafinado, Plays, by Antonio Carlos Jobim.

I could barely play a melody, so I started learning the melodies of two songs I like. Both were Tom Jobim tunes. I had always loved listening to Desafinado and Chega de Saudade among the jazz MP3s stashed on a jazz band server at work in 2008. I learned these bossa nova melodies as starting points for learning to improvise. After a few months I had fully learned the melodies to these two songs, along with the chords to various other bossa nova songs. Because I was learning new songs, I recorded many tracks of myself practicing the melodies and chords as written.

While practicing guitar technique by learning jazz standard melodies from the Real Book, I noticed that different sources gave different chords to play along with the same melody. Why? I knew that some chords were subsets of other chords, but I found it difficult to recall all the chords related to a given chord. I didn’t have a good way of organizing related chords. Data scientist mode kicks in. With the help of a computer, I could just list all the notes I can play, enumerate all possible chords, and make my own naming system, couldn’t I? Then I could develop a measure of closeness, based on shared notes and dissonances, to recall related chords or chords with a similar function. It is just like me to try to solve a problem through computation.
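The enumeration idea is small enough to sketch. The code below is a minimal illustration, not code from my repository: it treats a chord as a set of pitch classes (0 = C through 11 = B, ignoring octave and voicing), enumerates every such set of a given size, scores closeness with Jaccard similarity on shared notes, and counts interval classes as a rough proxy for dissonance. The function names, the Jaccard measure, and the interval-class proxy are all my own assumptions for illustration.

```python
from itertools import combinations

# Pitch classes: 0 = C, 1 = C#, ..., 11 = B (sharps only, for simplicity)
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def enumerate_chords(size):
    """Every set of `size` distinct pitch classes, ignoring octave and voicing."""
    return [frozenset(c) for c in combinations(range(12), size)]


def spell(chord):
    """Spell a pitch-class set as note names, lowest pitch class first."""
    return " ".join(NOTE_NAMES[p] for p in sorted(chord))


def shared_note_similarity(a, b):
    """Jaccard similarity: notes in common divided by distinct notes overall."""
    return len(a & b) / len(a | b)


def interval_class_vector(chord):
    """Count interval classes 1..6 between every pair of notes.

    Index 0 counts semitones and index 5 counts tritones, the intervals
    usually heard as the most dissonant.
    """
    counts = [0] * 6
    for a, b in combinations(sorted(chord), 2):
        ic = min(b - a, 12 - (b - a))  # fold intervals larger than a tritone
        counts[ic - 1] += 1
    return counts


def related_chords(target, candidates, top_n=5):
    """Rank candidates by shared notes with `target` (ties keep input order)."""
    others = [c for c in candidates if c != target]
    return sorted(others, key=lambda c: shared_note_similarity(target, c),
                  reverse=True)[:top_n]


if __name__ == "__main__":
    four_note = enumerate_chords(4)
    cmaj7 = frozenset({0, 4, 7, 11})  # C E G B
    print(len(four_note), "four-note pitch-class sets")
    print("Cmaj7 interval classes:", interval_class_vector(cmaj7))
    for chord in related_chords(cmaj7, four_note):
        print(spell(chord), round(shared_note_similarity(cmaj7, chord), 2))
```

There are only 220 three-note and 495 four-note pitch-class sets, so brute force is cheap. For Cmaj7, every four-note chord that keeps three of its notes (Am7, spelled A C E G, is one of them) ties at a similarity of 0.6; a finer ranking would need something like the interval-class counts as a tiebreaker.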

An old laptop. I always try to solve problems with computers.

But that got me thinking... Could I have the computer listen to a melody I’m playing and give me feedback? Could it give me suggestions on what chord changes would work with the melody? Could I make an algorithm detect chords and respond with an improvised melody? While I struggle to play Hanon exercises up and down the neck of my guitar, could I also create an artificially intelligent computer tool capable of understanding music I play and giving me suggestions to improve?

I looked for existing tools that could solve my problem. Various tools can transcribe melodies from recordings. The Python audio library librosa can show which pitch classes are present in a melody by computing a chromagram. For individual notes, the problem is largely solved: estimate the fundamental frequency of the sound. But would that work for several notes played at once (chords)? One tool I tried, Chordify, offered a free trial. It detected and displayed the chords in "Yellow" by Coldplay, so someone had already solved the problem of chord detection. But I didn’t want to pay for it.
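To see why a single note is easy and a chord is harder, here is a NumPy-only sketch of both ideas. It is not librosa's implementation (librosa provides `librosa.feature.chroma_stft` for chromagrams and `librosa.pyin` for pitch tracking, with far more care); it only shows the core idea. The strongest FFT peak gives a naive fundamental frequency for one note, and folding all spectral energy into 12 pitch-class bins gives a single chroma frame. The synthetic sine tones stand in for a real guitar recording, which is an assumption: real guitar notes carry harmonics that can outweigh the fundamental and fool the naive peak picker.

```python
import numpy as np

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def midi_to_hz(midi):
    """Equal-temperament frequency with A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2.0 ** ((midi - 69) / 12.0)


def hz_to_note_name(freq):
    """Nearest equal-tempered note name, e.g. 440.0 -> 'A4'."""
    midi = int(round(69 + 12 * np.log2(freq / 440.0)))
    return NOTE_NAMES[midi % 12] + str(midi // 12 - 1)


def synth_tones(midi_notes, sr=22050, duration=1.0):
    """Sum of pure sine waves: a stand-in for a real guitar recording."""
    t = np.arange(int(sr * duration)) / sr
    return sum(np.sin(2 * np.pi * midi_to_hz(m) * t) for m in midi_notes)


def spectrum(signal, sr):
    """Magnitude spectrum of one Hann-windowed frame, with bin frequencies."""
    mags = np.abs(np.fft.rfft(signal * np.hanning(len(signal))))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sr)
    return freqs, mags


def estimate_f0(signal, sr):
    """Naive f0: the frequency of the strongest spectral peak."""
    freqs, mags = spectrum(signal, sr)
    return freqs[np.argmax(mags)]


def chroma_vector(signal, sr, fmin=50.0):
    """Fold spectral energy into 12 pitch-class bins (C..B), scaled to max 1."""
    freqs, mags = spectrum(signal, sr)
    keep = freqs >= fmin  # skip DC and rumble, and avoid log2(0)
    midi = 69 + 12 * np.log2(freqs[keep] / 440.0)
    pitch_class = np.round(midi).astype(int) % 12
    chroma = np.zeros(12)
    np.add.at(chroma, pitch_class, mags[keep] ** 2)  # accumulate energy per bin
    return chroma / chroma.max()


if __name__ == "__main__":
    sr = 22050
    print(hz_to_note_name(estimate_f0(synth_tones([69], sr), sr)))  # A4
    chroma = chroma_vector(synth_tones([60, 64, 67], sr), sr)  # C major triad
    print(sorted(NOTE_NAMES[i] for i in np.argsort(chroma)[-3:]))
```

A chroma frame tells you which pitch classes are sounding, but not which of them is the root or how the chord should be named; that naming step is where the chord-detection problem really starts.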

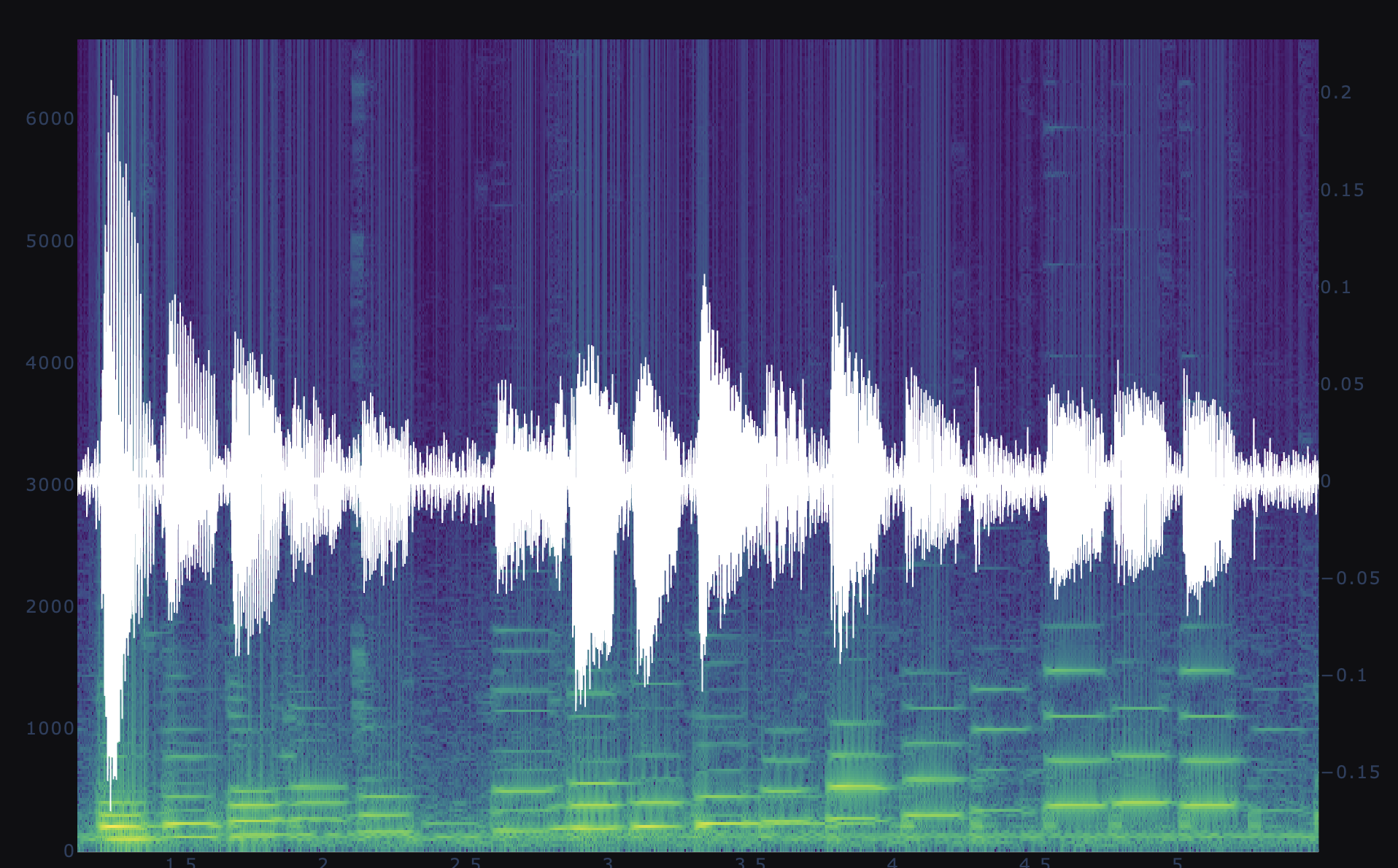

Chromagram of note intensities from the librosa library in Python. The data source is a G major scale played through two octaves, up and down twice.
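To check a chromagram like this one against what was actually played, it helps to write out the expected note sequence. This sketch builds a two-octave major scale from its whole and half steps and plays it up and down twice. Starting on G2 (MIDI 43, low E string at the 3rd fret) and not repeating the turnaround notes are my assumptions about how the scale was played; the helper names are hypothetical.

```python
# Semitone steps of a major scale: whole, whole, half, whole, whole, whole, half
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def major_scale(root_midi, octaves=2):
    """MIDI numbers of a major scale from the root up `octaves` octaves."""
    notes = [root_midi]
    for _ in range(octaves):
        for step in MAJOR_STEPS:
            notes.append(notes[-1] + step)
    return notes


def up_and_down(scale, repeats=2):
    """Play the scale up and back down `repeats` times, sharing turnaround notes."""
    cycle = scale + scale[-2::-1]  # up to the top note, then back down to the root
    sequence = list(cycle)
    for _ in range(repeats - 1):
        sequence += cycle[1:]  # the root already ends the previous pass
    return sequence


def note_name(midi):
    """MIDI number to scientific pitch name, e.g. 43 -> 'G2'."""
    return NOTE_NAMES[midi % 12] + str(midi // 12 - 1)


if __name__ == "__main__":
    # Assumption: the scale starts on G2 (MIDI 43)
    sequence = up_and_down(major_scale(43))
    print(len(sequence), "notes:", " ".join(note_name(m) for m in sequence))
```

Only seven pitch classes (G A B C D E F#) appear in the sequence, so the energy in the chromagram should concentrate in those rows. Some energy in the other rows is still expected, because overtones of scale notes (for example the third harmonic of F#, which is a C#) can land outside the scale.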

I stubbornly started engineering a system to identify notes and chords that I could call my own. I started by creating an algorithm trained on labeled sound data. It would predict notes, intervals, and chords, using labels that I would encode with my knowledge of music theory. That meant I could choose to label the sound in many different ways: I could name individual notes, name whole chords, or name the intervals that are present. Each labeling method has advantages and drawbacks. I would create the sounds I wanted to classify with my guitar and encode labels describing features of the chords. What would predictive models for these labels look like? Would I be able to inspect the models to learn what information is required to identify specific harmonic intervals from spectral data?
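The three labeling schemes can be derived from the same ground truth, the list of notes I played. The sketch below is a hypothetical illustration, not the labeling code in my repository: a multi-hot pitch-class vector for notes, the set of interval classes for intervals, and a chord name looked up in a small, deliberately incomplete quality table. The table and all function names are assumptions.

```python
from itertools import combinations

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Hypothetical lookup: intervals above the root (semitones) -> chord suffix
CHORD_QUALITIES = {
    (0, 4, 7): "",         # major triad
    (0, 3, 7): "m",        # minor triad
    (0, 3, 6): "dim",
    (0, 4, 8): "aug",
    (0, 4, 7, 11): "maj7",
    (0, 4, 7, 10): "7",
    (0, 3, 7, 10): "m7",
    (0, 3, 6, 10): "m7b5",
}


def note_labels(midi_notes):
    """Multi-hot vector: 1 for each pitch class (C..B) that is sounding."""
    labels = [0] * 12
    for m in midi_notes:
        labels[m % 12] = 1
    return labels


def interval_labels(midi_notes):
    """Sorted interval classes (1..6 semitones) between sounding pitch classes."""
    pcs = sorted({m % 12 for m in midi_notes})
    return sorted({min(b - a, 12 - (b - a)) for a, b in combinations(pcs, 2)})


def chord_label(midi_notes):
    """First root (in pitch-class order) whose shape is in the table, else None.

    Chord naming is ambiguous (C6 and Am7 contain the same notes); this
    sketch resolves the ambiguity arbitrarily by table contents and order.
    """
    pcs = {m % 12 for m in midi_notes}
    for root in sorted(pcs):
        shape = tuple(sorted((p - root) % 12 for p in pcs))
        if shape in CHORD_QUALITIES:
            return NOTE_NAMES[root] + CHORD_QUALITIES[shape]
    return None


if __name__ == "__main__":
    g7 = [43, 47, 50, 55, 59, 65]  # a G7 voicing: G2 B2 D3 G3 B3 F4
    print(note_labels(g7), interval_labels(g7), chord_label(g7))
```

The trade-off shows up immediately: note and interval labels are unambiguous but say nothing about function, while chord names carry function but force a choice between equally valid spellings.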

Eastman AR605CE guitar with Tune-o-matic bridge

I spent the summer recording bossa nova on my guitar. I practiced scales and other exercises and built a library of sound data that I started to label. To create an algorithm that predicts the label of a sound, what should the model's input be? I believe the information needed to infer a label from a sound is contained in its frequency spectrum. Why? When sound waves enter the ear, an animal's brain receives stimulation that ultimately comes from nerves in the cochlea. The cochlea is a structure that vibrates in response to sound. Its shape, combined with its mass and stiffness, gives it an array of distinct resonant frequencies. In theory, these frequencies are associated with different resonant mode shapes inside the cochlea. As with any resonant structure, the amplitude of vibration in each mode depends on the frequency content of the forcing function. So I conclude that the information our brains get from our hearing apparatus must be contained in the frequency spectrum of the sound, and my algorithm should use some kind of spectrogram as its input.
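The resonance argument can be made concrete with the textbook model of a single vibration mode as a driven, damped harmonic oscillator. This is an idealization for illustration, not a model of the cochlea's actual mechanics. With natural frequency $\omega_0$, damping rate $\gamma$, mass $m$, and sinusoidal forcing of amplitude $F_0$ at frequency $\omega$:

$$
\ddot{x} + \gamma \dot{x} + \omega_0^2 x = \frac{F_0}{m}\cos(\omega t)
$$

the steady-state vibration amplitude is

$$
A(\omega) = \frac{F_0/m}{\sqrt{\left(\omega_0^2 - \omega^2\right)^2 + \gamma^2 \omega^2}}
$$

For light damping, $A(\omega)$ peaks near $\omega = \omega_0$ and falls off away from it. Because the model is linear, a sound made of several frequencies drives each mode with the sum of the individual responses, so each mode roughly reports how much energy the sound carries near its own resonant frequency.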

G major scale, two octaves up and two steps down. Spectrogram and waveform overlaid.
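A spectrogram like the one above is a sequence of short-time spectra: slice the waveform into overlapping frames, window each frame, and take the magnitude of its FFT. The NumPy sketch below shows that idea only; `librosa.stft` and `scipy.signal.stft` do the same with more options (for example, centered padding). The frame size of 2048 samples and hop of 512 are assumed values, and the function names are hypothetical.

```python
import numpy as np


def spectrogram(signal, sr, n_fft=2048, hop_length=512):
    """Magnitude spectrogram: Hann-windowed frames -> |rFFT| per frame.

    Returns (freqs, times, S), where S has shape (n_fft // 2 + 1, n_frames).
    """
    if len(signal) < n_fft:  # pad very short clips to one full frame
        signal = np.pad(signal, (0, n_fft - len(signal)))
    n_frames = 1 + (len(signal) - n_fft) // hop_length
    window = np.hanning(n_fft)
    frames = np.stack(
        [signal[i * hop_length: i * hop_length + n_fft] * window
         for i in range(n_frames)],
        axis=1,
    )  # shape (n_fft, n_frames): one column per frame
    S = np.abs(np.fft.rfft(frames, axis=0))
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    times = (np.arange(n_frames) * hop_length + n_fft / 2) / sr  # frame centers
    return freqs, times, S


def to_db(S, floor=1e-10):
    """Log-magnitude in decibels, which is closer to how loudness is perceived."""
    return 20.0 * np.log10(np.maximum(S, floor))


if __name__ == "__main__":
    sr = 22050
    t = np.arange(sr) / sr
    tone = np.sin(2 * np.pi * 440.0 * t)  # one second of A4
    freqs, times, S = spectrogram(tone, sr)
    print(S.shape, freqs[np.argmax(S[:, 0])])
```

Each column of the log-magnitude array is one candidate input vector for a classifier. The frame size sets the trade-off: at 22,050 Hz, 2048 samples give bins about 10.8 Hz apart, which is coarser than the roughly 5 Hz spacing between adjacent notes near the guitar's low E, so low notes need longer frames at the cost of timing detail.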

I created a GitHub repository to store the code I am using to analyze the sound data and to build a sound-labeling UI in Python with Dash.

GitHub repo: https://github.com/ubiquitousidea/soundanalyzer